All views expressed in this article are my own.

This article is also available on Kernel's website.

I've got some news. In June of this year, I moved to sunny Los Angeles to join Kernel as a Data Scientist. I left academia (again) to join the small group of engineers, scientists, designers and many more who have banded together to change the world by democratizing neuroscience. In this post, I want to share why I joined Kernel and what I've learned in the six months since doing so. I hope to shed some light on what Kernel is attempting to achieve, and perhaps help others who are currently at a crossroads in their professional lives (especially early-career scientists and engineers). So let's get to it!

Why I joined

A compelling mission (hint: it's not about control)

Working in the field of neurotechnology for the last ten years has been an incredibly exciting and rewarding experience, offering a first-row seat to a major shift from academic research and science-fiction towards exciting companies and products, big and small. Perhaps even more notable is the way the field has captured the public's imagination, spurring a myriad of discussions and debates around a wide range of topics, from augmentation to brain uploading, all the way to the nature of consciousness itself.

This earnest effort to turn decades of neuroscientific research into compelling products has spawned a vast (and ever-increasing) number of startups. A sizable share of these privately funded efforts focus on control (e.g. Facebook, Neuralink, Paradromics). The idea is compelling—replace the computer mouse with a mere thought (or flick of the wrist)—but is also limiting. Control is a fairly narrow problem. And the bar is set very high (for non-medical applications). Superseding the intricate machinery that is the hand is a tall order. There certainly will be, and already are, some specific (often niche) use-cases where the benefits of a hands-free neural control signal can make up for most of the limitations, but I think truly mainstream adoption is still somewhere over the horizon. That's not to say I don't believe in a future where control will be entirely mediated by neural interfaces. I think it's inevitable. Rather, the current focus on control may be distracting us from more immediate, and perhaps more exciting, applications.

This is where Kernel comes in. Kernel is openly and intentionally not pursuing control. In fact, the very nature of Kernel's first device, Flow (a functional near-infrared spectroscopy helmet that uses light to measure hemodynamic changes in the brain), makes it a poor choice for replacing your mouse. Instead, Kernel is going after something else: quantifying the brain.

It's been famously said that "what gets measured gets improved." Without dwelling on the merits of that particular quote, it does outline an important fact: we are blind to what we cannot measure. If your doctor doesn't know your blood pressure, they can never hope to fix it. Similarly, counting your steps or measuring your resting heart rate can lead to significant improvements in fitness and health. Regrettably, when it comes to the brain, we measure very little. Sure, we have expensive and difficult to interpret medical imaging. But we lack simple, widespread and easy to understand measures of brain activity and health. The brain doesn't have the equivalent of a blood pressure cuff (i.e. something you might measure once or twice a year in the right setting, like your doctor's office ). And the brain most certainly doesn't have the equivalent of a fitness tracker (i.e. something you might use at home, every day).

That's fundamentally Kernel's ambition: measure something of substance about the brain non-invasively and distill it into a metric that's as simple as possible, yet still informative. Even a single brain measure fitting the above description would be a game-changer—enabling us to inform, entertain, improve, guide, learn and grow. That's a clear and exciting mission, and one I was immediately drawn to.

Openness and humility

As a scientist and lifelong skeptic, I have been fairly transparent about my dislike of the neurobabble and hyperbole that is prevalent in the neurotech industry. While I can certainly appreciate the necessity for embellishment, I think there's a fine line between sales tactics and plain old nonsense—one too many happily step over.

Since I first started following Kernel, I was struck by the openness and humility with which they approach their ambitious goals. I have watched as Kernel leaned into and cultivated this philosophy, and I now consider it to be one of its greatest strengths. Internally, this approach ensures that decisions are made rationally and that scientific rigor is embraced. Externally, it builds trust within the neurotech community and beyond and serves as a template for other companies to follow. This thinking is also reflected in Kernel's commitment to giving users full agency over what happens to their personal and brain data—a refreshing position in today's tech landscape.

A recent and compelling example of this philosophy at work is the Flow U program, designed to put Kernel's device in the hands of scientists for a free year-long trial. If researchers don't find value in what Kernel has to offer, they can return the device at no cost.

Importantly, while Kernel's approach is marked by openness and humility, it does not lack in determination when it sets its sights on a target. Nor does it shy away from taking credit for its successes—which have been remarkable, as we'll touch upon later. To me, this represents the perfect balance and allows Kernel to set groundbreaking, yet realistic, ambitions.

A word about the academia-industry dichotomy

I had resolved not to stoke the flames of this infamous debate any higher. But the thought that it might help others—including my younger self—set a course in their professional lives with more confidence made me reconsider.

Some say the differences between industry and academia are overplayed, yet my experience has been that of two consistently different worlds. A lot of the obvious distinctions have become well-worn clichés by now and need no repeating (i.e. the money, work-life balance, etc). There is one, however, which I have encountered less often, yet was the most meaningful for me personally: the level and depth of collaboration.

Companies offer a much more collaborative, open and sharing working environment. The best explanation for this I can think of is that academia is ultimately a single-player game, where you—the researcher—are the brand and the product. My experience outside of academia has been starkly different. The goal alignment intrinsic to laboring together towards a clearly articulated goal lends itself to much more spontaneous, frictionless and genuine collaboration—and on much larger scales. While this is certainly a matter of personal preference, the feeling of belonging and cooperation stemming from this type of working environment can be highly fulfilling, and frankly: a lot of fun.

Luck, opportunity, and the windiness of life's path

In addressing the question of "Why I joined Kernel," it would be disingenuous not to acknowledge the role played by luck and opportunity. Yet I think there is an important lesson in this too. Life is hard to predict and plan for. Things go awry, surprises happen (I'm looking at you COVID-19), and reality gently brushes your plans aside.

But amidst the noise of circumstance, it pays to be prepared and it certainly pays to be persistent. In hindsight, I can see that the path that led me to Kernel was a long spiral, rather than a straight line. When I first heard of Kernel's existence, several years ago, I immediately applied. While that disappointingly didn't work out, learning about Kernel's progress—and writing about it on this blog—ultimately put me on a collision course with them. Without necessarily knowing it, the choices I made along the way prepared me to seize the opportunity when it came. Like Steve Jobs famously said: "You can’t connect the dots looking forward; you can only connect them looking backwards. So you have to trust that the dots will somehow connect in your future."

What I learned

A really compelling mission

I've explained why Kernel's ambitious mission was so compelling to me from the outside looking in. What I've learned since joining the company has deepened my appreciation for it. The brain is an unimaginably complex object that we are only beginning to understand. Despite our relative ignorance, we can already measure a remarkable number of things about it using various physical sensing modalities (electrical, optical, magnetic, acoustic, etc). Amongst these non-invasive approaches, Kernel's Flow device stands out by using an advanced optical measuring technique (time-domain functional near-infrared spectroscopy, which I explored in more depth here) that gives it unique advantages over more commonly used methods (such as electrical-signal based EEG).

These precise measurements will allow Kernel to define simple yet informative metrics that quantify the brain's health and state. While a simple low-dimensional measure will never describe the full complexity of a system like the brain, there are clear advantages to breaking down large, difficult-to-probe systems into digestible insights. Some familiar examples of (relatively) complex systems being reduced to accessible metrics include: resting heart rate (an indicator of cardiovascular health), heart rate variability (an indicator of both cardiovascular health and autonomic nervous system function), and body-fat percentage (an indicator of fitness and adiposity). Each of these examples allows entire companies (or divisions) to exist and can be measured at home with affordable and simple to use devices (smart scales, fitness trackers, etc). What if we had a handful of metrics like that for the brain (our most important and perplexing organ)? For instance, what if we had a metric that captured brain performance/ability, and one that captured brain health/aging. Wouldn't you want to know these two numbers for your brain and how they compare to others in your age group, or finally be able to measure the impact of your life choices on its health and performance? I know I certainly would!

These rigorous, yet simple to understand metrics could form the basis of a wide range of compelling applications in areas as diverse as self-improvement (e.g. neurofeedback), entertainment (e.g. adaptive video-games), mental health (e.g. monitoring, virtual reality therapy, etc), learning (e.g. real-time state monitoring), and many more.

While the appeal for individuals is clear, the current price and form factor put that slightly out of reach (although as I've written in the past, Kernel's ambition is to rapidly democratize access to these insights by having a Flow device in every home by 2033). Luckily, the very same metrics I described above can provide tremendous value to individuals without ever putting a device on their heads. We live in the era of social media, targeted ads and behavioral nudging, all of which have profound—yet often poorly understood and measured—impacts on our brains. With the tools at Kernel's disposal, we have the opportunity to identify and communicate how a given activity or product impacts your brain, using simple, validated metrics rather than obscure, difficult to replicate ad-hoc studies. Think of it as identifying fast food for the brain so you can steer clear.

Taking this line of thought further, we can imagine how forward-thinking companies might agree to go through a certification process akin to an organic label for the brain, designed to verify that their experiences and products have a positive or neutral impact on the brain. Armed with this knowledge, consumers might begin making more deliberate choices about what they expose their brains to and companies might finally have the incentive to take mental health and wellbeing into account early in the design process.

All of this is easier said than done. But Kernel's strength isn't that it has all the answers (although we have some ideas 🤫). Rather, Kernel's strength is that it is currently the best-positioned player—by a long shot—to get to those answers. I'll expand on why I believe that to be the case next.

World-leading team and expertise

I've mentioned my affinity for the collaborative spirit of startup companies. At Kernel, this is magnified by the exceptionally talented individuals it is made up of. While my sample size is modest, I can safely say that I have never felt surrounded by as much competence and brilliance as I am at Kernel. One of the most difficult challenges a startup faces is assembling a world-class team while safeguarding its values and unique character. Kernel has done a tremendous job at this.

What is perhaps even more remarkable is that Kernel has achieved this as a full-stack company—one which deals in everything from circuit design, mechanical prototyping, and software engineering to machine learning, neuroscience, cloud analysis, and more. Very few places (companies or otherwise) can say they have a state-of-the-art device that is entirely designed, assembled, tested and then deployed as part of large scale, rigorous neuroscientific research studies—all in the same building.

The quality and breadth of expertise on Kernel's team, perhaps more than any other point I made in this post, is what gives me immense confidence in its ability to succeed.

A device so good that Kernel has no direct competition

Let's talk product. Up until now, I've focused on the intangible: the vision, the mission, the talent. But Kernel has been around for some time, and those qualities have already delivered some very tangible results—Flow.

By all conceivable metrics, Kernel Flow is leaps ahead of any other non-invasive optical system out there (i.e. number of channels, head coverage, sampling frequency, ease-of-use, scalability, etc). More importantly, Kernel has carved out a unique niche within the entire spectrum of non-invasive brain measuring techniques. In contrast to other common approaches, like EEG, Flow has greater spatial resolution and access to unique information, such as the absolute concentration of oxygenated hemoglobin in the cortex. The ability to tap into this rich information—for the entire head—is one of Kernel's key competitive advantages. I do not believe that EEG, especially low channel-count systems (e.g. frontal only, in-ear, etc) will be able to provide the paradigm shift that Kernel is angling for. The only non-invasive devices that can compete with the data Flow produces are multi-million-dollar fMRI machines, which have none of the ease-of-use, portability and scalability needed to truly democratize access to these insights.

On the other end of the spectrum, invasive techniques (which require some form of surgery to insert into the brain) can offer rich information and are uniquely poised to improve the lives of people with spinal cord injury and other forms of trauma and disease of the nervous system. However, when it comes to consumer applications, invasive interfaces' current inability to capture data for the entire cortex, and their obvious barriers to entry, put them on an altogether different—and longer-term—trajectory.

It's therefore clear that Kernel operates in a very unique space. Flow strikes a careful balance: it can capture rich information about the brain while remaining portable, affordable and scalable. Nobody else can quite say that today—Kernel has invented and engineered itself out of any direct competition.

As I've said in a previous post, being on the most promising path still doesn't guarantee success—our mission may turn out to be impossible altogether. What I hope to have imparted, however, is that Kernel is currently the one company in the world best positioned to harvest the fruits of decades of neuroscience and share them widely.

The long road ahead won't be easy

Lest I be labelled overly optimistic—perhaps even naive—let me finish with this. Kernel is a startup. And a startup is still a startup: an unlikely bet taken in pursuit of a better future one believes to have glimpsed. The wilder the future, the riskier the bet. And Kernel is after a pretty wild future.

Everyone at Kernel is well aware that the road that lies ahead is littered with unknowns. There will most certainly be stumbles and a few dead-ends, perhaps even difficult decisions to make—that's the cost of dreaming big.

Kernel's mission of democratizing brain measurement hinges on finding the intersection between rigorous neuroscience, clarity and mainstream appeal. That's a tall order. But I think nobody else has a better chance—and that's an exciting position to be in.

If the 20th century had the space race, the 21st will be marked by the unravelling of the mystery that is the human mind. But unlike the conquest of space, the race to harness the power of the brain is increasingly being run by private companies, not nations. It’s exciting to witness the tremendous progress happening in the field of neurotechnologies and the broad interest bubbling up around it. While last century’s space race is plastered across the front pages of most streaming apps (e.g. For All Mankind, The Right Stuff, First Man), an equally momentous shift is happening in neuroscience right now, albeit at the sluggish pace of real-time. In the era of binge-watching, the slow trickle of scientific advances and technological breakthroughs can leave one hungry for more, just like the abridged weekly episodes of a favourite show. Good thing, then, that a new episode has aired. The latest instalment in the neurotech saga comes to us courtesy of Kernel, Bryan Johnson’s neural interface company. Let’s press play.

Kernel debuted their first-ever device, Kernel Flow, during a recent live event. Kernel plans to make the Flow available to 50 lucky early partners in the coming months. If that device name sounds familiar, that’s because Kernel had unveiled it earlier this year (which I wrote about here). As a reminder, Kernel had announced two devices, the Flow, which we’ll talk more about in a minute, and the Flux, a magnetoencephalography (MEG) headset (note: I abhor acronyms, but I think we can all agree that a word like that deserves one). Bryan Johnson says the Flux MEG system is a mere 3 to 5 months behind its more mature sibling (Flow), so expect to hear more on that front soon.

A closer look at how Kernel Flow works

The star of the show was the Flow, a time-domain near-infrared spectroscopy (TD-NIRS) system (fine, maybe a few acronyms are okay). As the name suggests, NIRS uses infrared light to measure brain activity. The advantage of using light is that it’s safe (assuming certain limits are respected) and works from outside the body—no surgery required. Specifically, a source (a laser) pressed against the head shines light into the body. The light travels through the various layers (skin, bone, brain, etc), bouncing around like so many pinballs. Some portion of the light is absorbed by the body, while some of it makes it back out after lots of bouncing around and can be measured.

The principle behind NIRS can be demonstrated with a simple experiment most people are familiar with: shining light through the hand with a flashlight. When the light crosses the body, some of it is absorbed and some of it makes it to the other side. That’s why the light looks dimmer after passing through the hand. But why is it red? The answer is that light with a shorter wavelength (towards the purple side of the rainbow) is more strongly absorbed by the body, while the longer wavelengths pass through the body more easily (the red part of the rainbow). Although we cannot see it, infrared also passes through the skin very easily, which is one of the reasons it’s used for these types of measurement. Less absorption means infrared light travels deeper into the body and brings back more valuable information.

While shining light through the hand is an example of transmittance (light goes in on one side, comes out on the other), the human head is too large for that to work. Instead, NIRS relies on reflectance, which is achieved by placing light detectors near the source (e.g. arranged in a circle 1 cm around the laser). These sensors pick up light that bounced around inside the head (was scattered) and came back up to the surface. It’s possible to observe this effect with the same flashlight we shone through our hands earlier. By pressing it against any part of the body, the area around where the flashlight touches the skin will turn red. That’s reflectance.

If NIRS works by shining light into the body and measuring how much of it is reflected, how can it detect brain activity? To answer that, we must first look at an interesting optical property of blood. A major component of blood is hemoglobin, a large protein which binds to oxygen and carries it from the lungs to the rest of the body, where the oxygen is released and used as fuel by cells. Interestingly, hemoglobin absorbs red and infrared light differently depending on whether it’s carrying oxygen or not. And a lot of the body’s absorption of that part of the spectrum comes from hemoglobin. This makes it possible to determine how much oxygenated blood is currently running through a body part by simply looking at how much red and infrared light it absorbs.

This (finally) brings us to the brain. When a region of the brain is active (e.g. because it’s involved in executing some task) the neurons and local tissue consume more oxygen (and glucose). This triggers a relatively fast and specific increase in blood flow to that region, to resupply it with oxygen and other metabolites (this process is called the hemodynamic response). Within seconds, the blood flow to an active region of the brain increases, bringing with it more oxygenated hemoglobin (and more blood volume overall) and “washing out” the deoxygenated hemoglobin. The blood supply goes back to normal within about 15 seconds. When light travels through a region of the brain undergoing this change in blood supply, more infrared light is absorbed because of the higher amount of oxygenated blood in the tissue. This change in absorption is how NIRS indirectly measures brain activity: it simply detects the increase in blood supply caused by the activation of a chunk of brain.

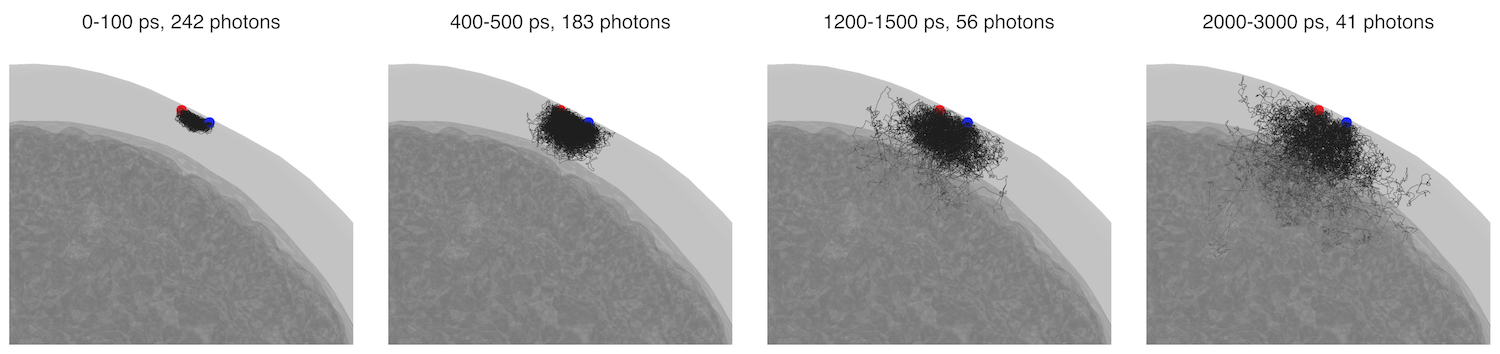

Not to go too far down the rabbit hole here, but there’s one more important piece of information about how Kernel Flow works. So far I’ve described NIRS in general terms, but I began this section by saying that Kernel Flow is a TD-NIRS device. Surely I wouldn’t have added two letters to an acronym (which you know how I feel about by now) just for fun. The TD stands for time-domain: a specific variant of NIRS which relies on extremely short pulses of light (only a few picoseconds long). Because the pulses are much shorter than the time it takes for light to bounce around the body and resurface, we can consider all of the light to have left at the same time. We can then use that assumption to estimate how long any light picked up by the detector travelled for—its time of flight—since we know both when it left the source and when it arrived at the detector. Knowing the time of flight is incredibly valuable because it allows us to “sort” the light based on how long it spent inside the body. Light that came back very quickly—and therefore probably bounced only a few times through the skin or bone and never reached the brain—mostly contains irrelevant information (artifacts). On the other hand, the light that arrived later—and therefore travelled deeper into the brain—contains valuable information about neural activity. Since this “late” light inevitably had to travel through those superficial structures before reaching the brain, we can subtract the signal obtained from the “fast” light to remove most artifacts. This makes TD-NIRS very good at extracting neural information specifically from the brain and ignoring irrelevant parts of the signal from the skin and skull. The additional depth information also helps localize neural activity more precisely within the cortex.

A simulation performed by Kernel showing the likely path (black lines) taken by light arriving at the detector (blue dot) from the source (red dot). Light arriving at the detector quickly (left panels) doesn't travel deep into the tissue, while light arriving later is more likely to have penetrated deep into the tissue (image from Kernel).

A simulation performed by Kernel showing the likely path (black lines) taken by light arriving at the detector (blue dot) from the source (red dot). Light arriving at the detector quickly (left panels) doesn't travel deep into the tissue, while light arriving later is more likely to have penetrated deep into the tissue (image from Kernel).

There’s one last advantage to TD-NIRS: using two light sources and some smart tricks allows for absolute concentrations of oxygenated and deoxygenation hemoglobin to be measured, instead of just relative changes. We’ll touch on this last bit some more later.

Putting Kernel Flow into context

To understand what Kernel is up against, it’s important to know that despite being available for a long time, NIRS has failed to gain widespread adoption as a tool for neuroscientific research (studying the brains of infants and children being perhaps the only exception, mainly because of how safe and robust to movement NIRS is). It has been steadily growing in popularity but remains a relatively niche brain recording method. While some researcher might push back against this characterization, it’s safe to say that NIRS has not reached as wide of an audience as some of the other non-invasive brain-recording approaches. The main reason for this is that NIRS is often seen as slow, big, imprecise and expensive. Not the best list of adjectives for a brain-computer interface, especially not one that ought to eventually find its way into people’s homes. Kernel is out to change that perception.

When Kernel began looking at this problem about five years ago, they started with a clean slate. They looked at everything under the sun—every possible way to peer into the brain. “We looked at literally everything,” Bryan Johnson tells me. Having quickly concluded that invasive technologies were not going to allow for the rapid democratization of brain interfaces that Kernel wanted to pursue, the team eventually settled on NIRS (and MEG) as the two most promising modalities. They saw great potential in these two somewhat neglected methods for measuring brain activity. In both cases, Kernel foresaw they could tackle many of the challenges preventing wider adoption of these devices, which were mostly engineering, rather than scientific, in nature (i.e. current devices are large, expensive, etc).

Their work clearly paid off. Kernel Flow blows all current TD-NIRS systems out of the water. For instance, a common limitation for NIRS systems is how quickly they can react to incoming light, which is usually measured in photons per second. The best research systems described in the literature reach up to a few tens of millions of photons per second (see here and here for examples). Kernel’s design has already demonstrated 800 million photons per second, with plans to reach above a billion—two orders of magnitude more than the state-of-the-art. When compared to existing commercial devices, the difference is even larger. The Kernel Flow is also a very flexible solution: by relying on a smart modular design, the headgear can be configured with a single sensor or up to 52 for full head coverage. Kernel has yet to announce any pricing, but the Flow is expected to cost an order of magnitude less than comparable devices. While the initial cost is unlikely to put the device in the range of a Christmas gift quite yet, it will be a compelling entry point for researchers, considering what the device offers.

Between the significant technological advances and the benefits inherent to TD-NIRS, Kernel hopes to have pushed the state-of-the-art far enough to unlock a whole new wave of interesting use-cases. Which brings up the following important question.

What exactly are you supposed to do with a TD-NIRS device at home?

The Kernel Flow will undoubtedly offer researchers already working with NIRS a powerful new tool. It will most likely also convince some researchers currently using other brain-measuring devices to make the switch to NIRS. But selling devices to scientists isn’t Kernel’s long term plan. They want to bring neuroscience to the masses. This raises the crucial question: what does one do with a TD-NIRS device at home?

The answer, according to Bryan Johnson, is that they don’t know yet. While this might sound surprising at first, it is the most honest answer he could give. Nobody knows all the ways a product that doesn’t exist yet might be used, especially if that product is a platform for others to build on. The reason that Kernel is creating the tool in the first place is the belief, shared by many in the blossoming neurotech industry, that the use cases will come—once the devices are built. Judging by the backlash Neuralink (another neurotechnology company) received from the scientific community for promising the sun and the moon, one can see why the cautious approach might be more judicious for Kernel.

“Reducing friction,” is what Bryan Johnson tells me Kernel is about. And he has experience doing just that: his previous company, Braintree (which later bought the payment app Venmo), focused on fast, simple and frictionless monetary transactions, to great effect (Braintree was purchased by PayPal for $800 million). The hope is that by building a best-in-class device at an approachable price, the applications will build themselves. At first, Kernel plans to make the Flow available to a wide range of early-adopters interested in exploring the device’s capabilities (neuroscientists, entertainment, gaming and pharmaceutical companies, etc). Once clear and compelling use-cases emerge, Kernel will start distributing the device to consumers.

While Bryan Johnson deliberately withholds any strong opinions about specific “killer apps,” he has some ideas about the broad types of applications Kernel Flow might unlock in the near future. Quantification, he says, is the name of the game. By listening in on the neural symphony reverberating through our brains at any given moment, it might be possible to quantify abstract and often ill-defined concepts, like focus, cognitive load, aging, mental health, pain and a slew of others. This concept of quantification is bolstered by the observation that we humans are remarkably bad at perceiving the world as it really is, especially our own blind spots and biases. Putting numbers to otherwise subjective experiences might bring forth a new era of neuro-quantification and shared understanding, something Bryan Johnson explored in a 2018 post (an interesting read).

At least that’s the vision. In the more immediate future, quantifying brain activity might enable applications more limited in scope, like quantifying “cognitive performance” as it changes throughout the day or based on environmental factors. How did getting only a few hours of sleep last night impact my cognitive performance? What about that heavy meal or long walk? The same idea could be applied to several common activities and situations such as meditation, trying to focus on a task or learning. In the era of Fitbits, Apple Watches and smart scales, one can envision consumers having an appetite for something like that. But it’s hard to imagine how these examples alone could lead to the type of mass adoption Bryan Johnson pictures. After all, Fitbits have a nasty habit of finding their way into the forgotten gadgets drawer.

Another of the Kernel Flow’s strengths, stemming from the TD-NIRS technology it’s based on, is the ability to measure absolute concentrations of oxygenated and deoxygenated hemoglobin. This seemingly small detail enables robust comparisons between measures obtained in different people, different brain regions in a single person or even the same region over time. This is a key advantage over competing recording techniques and might enable interesting new use cases, including health-related ones (prevention, early disease markers, etc). However, as Kernel is well aware, stepping into the medical realm comes with additional delays and countless regulatory hurdles, which can slow down progress and blow up costs. That’s probably why Kernel says they have no immediate plans to seek regulatory approval for their device—but are likely to do so down the road, especially if there is a clear clinical application within reach.

You may have noticed that the list of applications does not include controlling things with your mind, something Neuralink is actively pursuing. Surely using your mind to play video games or control your computer constitute interesting use-cases? The reason those applications aren’t part of the discussion is that NIRS is fundamentally too slow for controlling most things with your mind, where the delay between intention and action needs to be less than about a tenth of a second. As we explored in detail above, NIRS measures an indirect effect of brain activity: changes in blood oxygenation and volume. The biological phenomena that cause these measurable blood changes take time—that’s a fairly inescapable biological reality. It’s like finding out if someone is hungry by waiting for them to eat—you’ll only be able to tell after the fact. Nonetheless, Bryan Johnson believes the naysayers are too quick to dismiss NIRS as fundamentally slow. And there certainly may be ways to speed things up by using smart tricks, especially with a fast device like the Flow. But despite these hypothetical speed gains, it’s doubtful that NIRS could be used for fast-paced input, like playing an online video game. Of course, NIRS would be a good candidate for less time-sensitive control tasks, like turning the lights on. However, as Bryan Johnson points out, direct motor control is unlikely to be the most exciting consumer application for non-invasive brain interfaces. Instead, measuring the state of the brain could make for more interesting scenarios—for instance, rather than simply switching the lights on and off, one might envision a system that automatically adapts the lighting in the room to optimize your focus or alertness.

Could Kernel really get a brain-computer interface in every US home by 2033?

The current Kernel Flow pricing starts at five thousand dollars for a basic configuration. Bryan Johnson estimates that the entry price could drop to as little as a few hundred dollars once it’s produced at high enough volumes. That would put the device well within reach of consumers. And if future iterations bring that price down even further, one can start to see where the 2033 figure comes from.

Given the constraints of a non-invasive device, I would argue that Kernel is doing everything right. They’ve chosen a realistic and unique path, and they’re pouring resources into building a best-in-class device. They’re approaching the broader question of consumer applications with candour, restraint and an open mind. But doing everything right does not guarantee success, and the biggest question mark remains use-cases. It seems to me like quantifying brain performance will not be quite enough to get a majority of people excited. It may be a viable business, sure, but not a device-in-every-home kind of deal.

There are countless cautionary tales of exquisitely engineered technologies failing to convince consumers. Magic Leap and Microsoft built groundbreaking augmented reality headsets, far surpassing what Google glass could do, yet nobody knows quite what to do with them. Or 3D printing, which was lauded as a solution to planned obsolescence and would have people printing their own forks at home by now, yet has so far only found a foothold in specific niches (certainly not as a widespread consumer device).

When I mention Magic Leap, Bryan Johnson answers that unlike augmented reality headsets, a brain-measuring device has inherent value that goes beyond interactive applications and experiences. Accessing the information hidden inside your skull, he argues, is intrinsically valuable—and therefore worth the effort. Even without compelling “entertainment” options, the device might still find other uses, such as providing medically relevant information or supporting positive life changes (better learning, higher focus, etc). While that may be true, I would caution that a functional brain imaging system, like the Flow, provides the most useful information when used within a specific and well-defined context. Just looking at a brain without knowing what it’s doing isn’t typically of much value. Users will presumably need to wear the device while engaged in specific tasks—and will therefore need a good reason to do so. This is not to say that there is no viable path for consumer adoption—but rather that the path has yet to be discovered. What we can say, based on the device’s performance compared to other non-invasive solutions, is that whatever that killer-app ends up being—it will likely run on Kernel’s Flow.

Bryan Johnson spoke of an inflection point, a moment where a particular technology reaches the threshold of “compelling-enough,” starting to make money, unlocking further technological advances and sales in a runaway virtuous cycle. He believes the first iteration of the Flow might push Kernel past that point and put them on an exponential curve of progress and profit. But it’s also possible that their first device, despite its merits, will fall just shy of that threshold. We’ll find out for sure in an upcoming episode. For now, as is regrettably too often the case, we are left with a cliffhanger.

A neuroscientist’s thoughts on Neuralink's announcement

September 2, 2020

One of the most anticipated general public neurotech events of the year happened last Friday: Neuralink’s second yearly update. Although last week was a particularly eventful one for neuroscience, with major announcements from multiple key players in the field, Elon Musk commands a level of attention unlike anyone else (warranted or not). So today we’ll focus on Neuralink and the technology they unveiled.

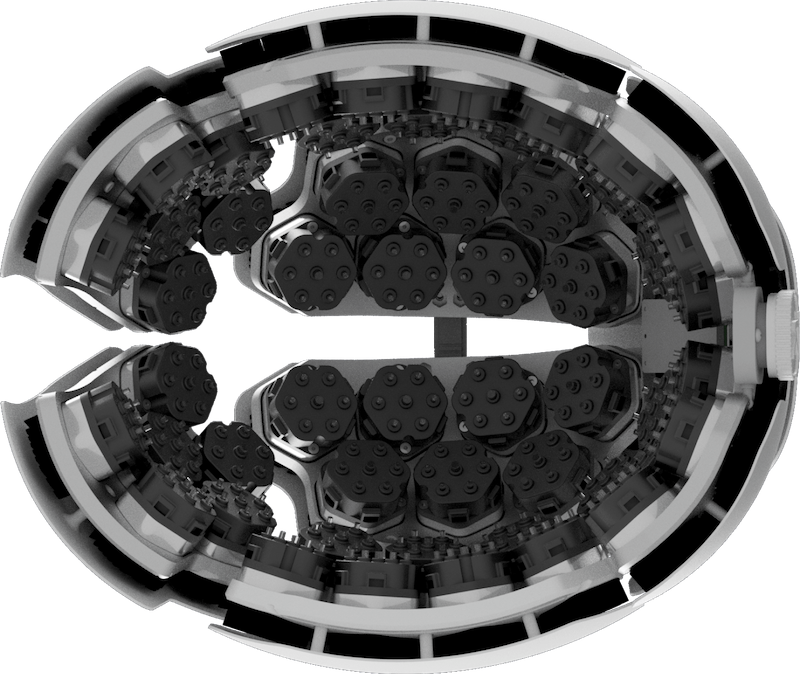

The Neuralink system

The system Neuralink presented during Friday's event is an evolution of the system they unveiled a year ago. Instead of implanting a single array of rigid electrodes into the brain, as is more common, their system inserts individual threads using a device reminiscent of a sewing machine. Indeed, a significant share of their efforts has been geared towards building a medical robot (i.e. the sewing machine) capable of quickly and precisely inserting the soft and flexible electrode threads into the brain. Individual threads are then connected to a custom chip that filters and compresses the recorded neural data, before sending it out through a low energy Bluetooth connection to a smartphone. A single chip can connect to around fifty to a hundred threads, each with multiple independent contacts, for a total of a thousand individual recording (and stimulation) sites. One of the major changes in the newly unveiled “second generation” device is that the entire system fits inside a capsule about the diameter of a quarter dollar coin, albeit five times thicker (2.3cm in diameter and 0.8cm thick). This package is meant to replace the piece of skull removed during surgery. In other words, a coin-sized hole is drilled into the skull, the electrodes are inserted into the brain using a robotic sewing machine, and then the hole in the bone is plugged with the device rather than the removed piece of skull. This allows for an elegant implant with limited wiring and no transcutaneous connectors. Of note, the surgical robot has also been significantly streamlined and improved, presumably moving it closer to something that could be used in an operating room and on humans. However, details were scarce, making it hard to evaluate any of these changes accurately. The short version is that the technology was very much in line with what had been shown last year, only improved, as can be expected of a company iterating towards a commercial product.

A presentation light on details

After their presentation last year, Neuralink received considerable backlash from the scientific community for excessive hype and failing to recognise similar existing technologies. While hype was still available in spades this year, there was in my view an effort to somewhat scale down ambitions. For example, Neuralink’s mission was stated in much more concrete terms this year: "The purpose of Neuralink is to solve important brain and spine problems." While there had certainly been talk of neurological disorders last year too, they had been overshadowed by very aspirational and futuristic mission statements within the first 5 minutes of the talk, including the distant idea of healthy people using brain interfaces to "achieve a symbiosis with artificial intelligence”. These ideas still made it onto the stage this year, but only later in the event during the speculative part of the Q&A session. Similarly, engineering efforts have been refocused and concretised. Last year’s plan of implanting 4000 channels in their first human participant appears to have been scaled down to a single 1024 channel device. This brings them much closer to the roughly 250 channels currently implanted in human participants enrolled in research studies, including at the University of Pittsburgh where I currently work.

While some neuroscientists are allergic to the hype and the grandiose statements Elon Musk is known for, it’s important to remember that Neuralink isn’t a research lab—it's a Silicon Valley startup. Their event had over a hundred thousand live streamers (despite being the middle of the night in Europe), and the video has since amassed over 2 million views. That’s a big reach—this was no scientific conference talk. A startup’s CEO needs to straddle the line between realistic short term goals and an overarching mesmerising vision to recruit talent and raise money. It’s the same duality that leads to sharing images of towns on Mars (perhaps even with a human-made atmosphere) all while building the world’s most advanced rockets. In Neuralink’s case, there is the added ethical danger of presenting treatments that clearly won't exist for decades, raising false hopes in people afflicted by neurological disorders. However, by offering no explicit timelines or plans, Neuralink is making no promises, only engaging in long term speculation. The same way we can trust people not to sell their homes in preparation for a move to Mars, we should trust them not to take dramatic health decisions based on 20 minutes of sci-fi thought experiments and speculation.

Neuralink’s scientific and engineering merits ought to be judged on the systems it produces, not its storytelling. That is what I will try to do here. Since there is simply not enough information to make a thorough evaluation—educated guesses will have to do.

Neuralink’s strength is not science, it’s engineering

Despite the aforementioned lack of details, a few laudable achievements were on display at Friday's event. The biggest was a demonstration that Neuralink's new device (Neuralink’s Neuralink?) works in vivo (in pigs), and did so for two months. The ability to wirelessly stream from 1024 cortical channels in a freely behaving animal is a big step in the right direction and a demonstration of what money and a talented team can deliver. The same is true of another extremely well-funded neurotechnology startup: Kernel, as I discussed after their last announcement. In both cases, the real breakthrough is the ability to take existing prototypes from research labs and integrate them into a well designed and engineered product. The robot and implantable system Neuralink showcased are more polished than anything currently available, even if none of the individual ideas or components is completely novel. At the end of the day, execution is what matters. The history of technology is littered with examples of companies successfully combining existing ideas and prototypes into compelling products and popularising them (e.g. Apple’s first successful point and click user interface, which borrowed from Xerox's PARC in-house prototypes).

The biggest challenges still lie ahead

While the engineering progress is impressive, where Neuralink still needs to do some convincing is on the science. What we have seen so far is excellent execution of things we knew could be done (miniaturisation, wireless system, surgical robot, etc). The next steps for Neuralink will increasingly involve tackling unsolved scientific challenges, which are an entirely different flavour of problem. Science is much harder to rush, it's less amenable to a startup’s timeline. For instance, Neuralink currently uses thin-film based electrodes, which have yet to demonstrate long term viability in academic labs (where they are extensively studied and have been for some time). Thin films have interesting properties such as very small sizes, high mechanical flexibility, and well-established fabrication processes, which make them well suited for brain implants. But their long term stability remains a looming challenge. So far Neuralink has demonstrated their electrodes can work for 2 months in pigs. That’s a long shot from showing they can work for five or ten years in humans. As Matt Angle, the CEO of another high profile neurotech startup called Paradromics, recently put it: “if you want to test whether something can last 10 years, you really have to wait 10 years.”

The other big challenge is what to do with a thousand channels once they are implanted and stable. Many of the applications mentioned in the presentation are plausible and actively researched (e.g. stimulation of visual cortex to restore sight, stimulation of the somatosensory cortex to restore touch, etc). But each of these is an active area of research full of unanswered questions. Having a thousand channels instead of a few hundred will not significantly change the game. For instance, we know that electrically stimulating any of these sensory areas can elicit corresponding percepts (e.g. localised flashes of light when stimulating the visual cortex). But these sensations are often crude replicas of their natural counterparts, with limited spatial resolution, unnatural qualities and limited dynamic range. While one can extrapolate from these results and imagine a future where improved artificial sensations become practical and useful, it will take a lot more than increasing the number of available channels by one or even two orders of magnitude.

Talking only about stimulation is perhaps a little unfair, since it's the less advanced side of the neural interface coin. Recording information from the brain is much better understood, especially for motor areas, as illustrated by this study from the University of Pittsburgh where a brain implant is used to control a robot with 10 degrees of freedom. Neuralink's first demonstration will likely be to help a paralysed person control a computer with their mind, or perhaps even a robotic device of some kind (this second one is less likely because of the added complexity). Concretely, a system like this could allow the user to interact with a computer by controlling a cursor (i.e. point and click) and input text (either by using a virtual keyboard, or perhaps a more cutting-edge approach like the one I discussed in a recent post). On top of that, the user could control a few additional virtual “buttons”. In other words, the first Neuralink product could be a virtual brain-steered controller, with a joystick, three or four buttons and a speech to text feature. While these are all things that are done routinely in research labs (see for instance Nathan, who is part of a study at the University of Pittsburgh, playing Final Fantasy with his brain implant), providing this functionality in a simple, user-friendly, take-home device with a safe surgical procedure would likely be a useful product for severely paralysed people.

To conclude, Neuralink has integrated some of the best ideas and prototypes from academia into a very polished and elegant cortical implant solution—they’ve built a fine ship, probably the best ship out there (certainly has a lot of sails). But they are now about to embark on a journey through unmapped and treacherous waters. Let's hope they don’t get lost.

This article originally appeared on Psychology Today

The promises of modern neurotechnology are plentiful, but perhaps none are quite as enticing as the prospect of using brain-computer interfaces for high-speed communication. Efficiently turning thoughts into words could help people with neurological disorders interact more seamlessly with the world around them, and in a more distant future, enable anyone to reach new levels of symbiosis with our increasingly omnipresent digital realities.

Unfortunately, even the most advanced neural implants available today, coupled with state-of-the-art algorithms, cannot reach anywhere near the interaction bandwidth achieved by a healthy pair of hands. A striking example of this shortcoming is text input. An average adult typing on a keyboard produces about 40 words per minute (exact values are hard to come by and depend on a very large number of factors, but this is a fair estimate). On a phone and with two thumbs, people are slightly slower, with an average of 36 words per minute (Palin et al., 2019). Interestingly, younger age groups (i.e. digital natives who have used smartphones from toddlerhood) achieve close to 40 words per minute, comparable to keyboard users.

Far from these speeds, the best invasive brain-computer interfaces to date only achieve about 8 words per minute. There’s clearly a long way to go. While there are multiple reasons for this stark difference, one of them is how typing happens with a brain-computer interface. Typically, a user will move a cursor on a screen (a mind-controlled computer mouse if you will) and use it to click on a virtual keyboard. This is similar to the painfully inefficient experience of entering text on a TV with a remote control: a single letter at a time. One can easily see why this leads to low typing speeds. To be clear, even a slow interface can be beneficial, especially for people with severe motor disabilities who have a hard time communicating or interacting with a digital device. Still, improving communication bandwidth is a major goal for brain-computer interfaces, with far-reaching clinical implications.

A new approach, described in a recently released pre-print article from Prof. Krishna Shenoy’s lab at Stanford, proposes to dramatically improve the experience of brain-controlled writing (Willett et al., 2020). The fundamental idea is simple: What if instead of controlling a cursor to click on virtual keys, one simply imagined writing letters with a pen?

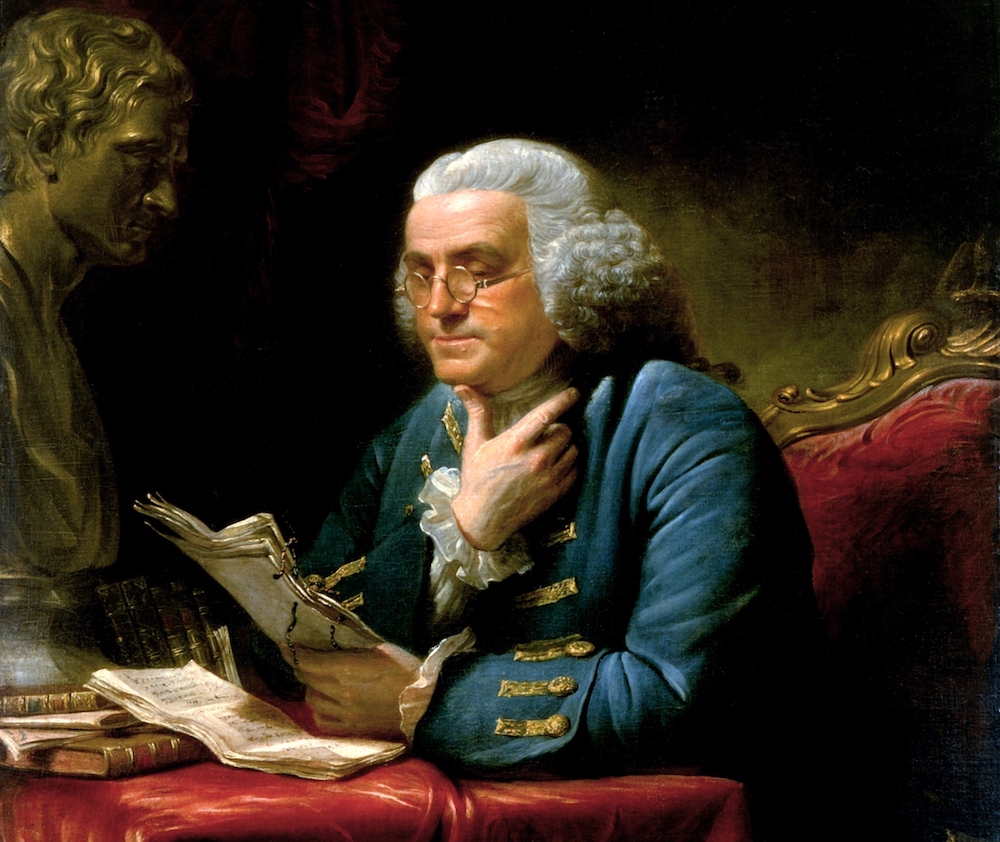

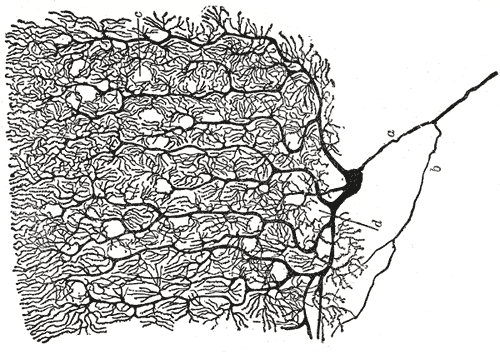

A brain microelectrode array.

A brain microelectrode array.

To achieve this, the study participant, who suffered a spinal cord injury, had two arrays of electrodes inserted in the area of his brain responsible for moving his right hand. Each of these arrays can be thought of as a “bed of nails” with about a hundred individual shafts (i.e. “nails") penetrating the brain (see picture). The activity of a handful of neurons close to the tip of each of these “nails" can be measured, allowing researchers to eavesdrop on the complex “neural symphony" of this small region of the brain. By listening in on the neural activity while the participant imagined writing letters with a pen for several hours, the authors were able to train a machine-learning algorithm to predict what letter the participant was trying to write based on its “neural signature.” Once the algorithm was trained, it could print letters on the screen almost as soon as the participant imagined writing them.

Using this new approach, the study participant was able to input text at a rate of just under 20 words per minute, about double what was previously possible with this type of brain implant. Importantly, this impressive gain in speed came without any changes to the implant. It was simply the result of a smarter strategy and better algorithms. One of the reasons this new technique performed better is the significantly reduced reliance on precision. Clicking an arbitrarily small virtual key requires accuracy. The smaller the key, the more precise (and slow) the pointing needs to be. But make the keys larger and the distance between them grows, negating much of the gains with increased travel times. Instead, when writing a letter with an (imagined) pen, the entire trajectory matters, not just the endpoint, relaxing the constraints on precision and speed.

While impressive, these results still fall short of average typing speeds attained with keyboards and smartphones, and about 5% of characters were misidentified by the software. This highlights the long road these systems still have to go before they can match physical interfaces.

Nevertheless, that “simple” changes in strategy can improve performance from 8 to 20 words per minute is a very promising sign. It hints at the possibility of using new paradigms to overcome the limited bandwidth of modern brain-computer user interfaces. Given that handwriting is typically limited to about 20 to 30 words per minute (Hardcastle et al. 1991, Burger et al. 2011), it’s likely that future gains in speed will require even more creative solutions, such as using stenography instead of longhand, or abstract interaction schemes that bypass the “physical" constraints of a virtual keyboard or pen.

References

Palin, Kseniia, Anna Maria Feit, Sunjun Kim, Per Ola Kristensson, and Antti Oulasvirta. "How do people type on mobile devices? Observations from a study with 37,000 volunteers." In Proceedings of the 21st International Conference on Human-Computer Interaction with Mobile Devices and Services, pp. 1-12. 2019.

Willett, Francis R., Donald T. Avansino, Leigh R. Hochberg, Jaimie M. Henderson, and Krishna V. Shenoy. "High-performance brain-to-text communication via imagined handwriting." bioRxiv (2020)

Hardcastle, R. A., and C. J. Matthews. "Speed of writing." Journal of the Forensic Science Society 31, no. 1 (1991): 21-29

Burger, Donné Kelly, and Annie McCluskey. "Australian norms for handwriting speed in healthy adults aged 60–99 years." Australian occupational therapy journal 58, no. 5 (2011): 355-363.

Kernel, the neurotech company founded by Bryan Johnson, just released a wave of new information about the technology they have been building over the past half-decade. With this announcement, we finally get a glimpse into the secretive company’s plans. We knew that they had ditched their intentions of pursuing invasive brain recording techniques, leaving that all to Neuralink, a similarly minded effort launched by serial entrepreneur Elon Musk. There were rumours of a NIRS (near-infrared spectroscopy) based system, but nothing concrete. Well here we are, so let’s get to it and unwrap some of the information revealed today.

The system(s)

The vision Kernel outlined in their post is that of a multimodal recording system based on MEG (magnetoencephalography, a mouthful) and NIRS (near-infrared spectroscopy). Both of these technologies are already available and widely used in research settings (and clinical settings too in the case of MEG). What’s new here is the package, miniaturization and ease of use. Far from trivial, these advances could allow both recordings modalities to move out of the lab and into more “ecological” settings (e.g. use during movement, daily activities, etc). If Kernel developed a robust system which successfully deals with the many notoriously tricky drawbacks of both NIRS and MEG, this would be an important contribution to the field, helping democratize these two underused techniques. The general thinking is that MEG offers something similar to EEG (high temporal resolution, limited ability to localize signal), only with better overall signal quality (at least theoretically, we haven’t seen any raw data), while NIRS offers a portable equivalent of fMRI (functional magnetic resonance imaging), at the price of reduced access to deeper brain structures and lower spatial resolution.

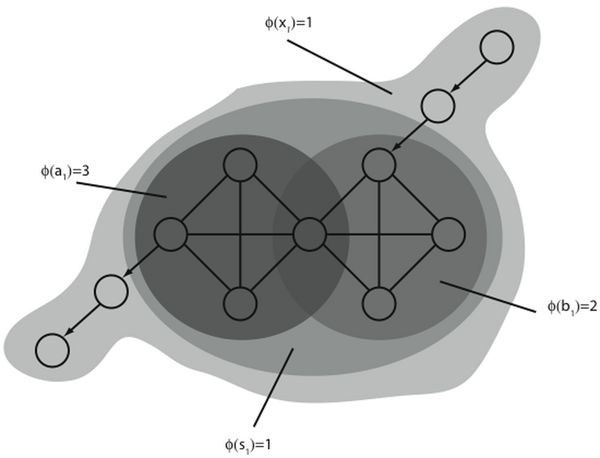

Although both modalities were already in use in research laboratories, Kernel’s contribution here is twofold: they tackled some of the key limitations of these techniques, and they produced a more polished and miniaturized version of each. The most exciting advance is the active magnetic shielding in their MEG system which, according to their post, allows them to acquire signals outside of a shielded room (active shielding has been proposed in the past, but not to the point of bringing the system outside shielded rooms, such as here and here). However, the data they report (more on that later) was acquired inside a shielded room ("All experimentation took place inside a magnetically-shielded room to attenuate environmental noise."), which raises the question of how well the system works in practice, and what the hit to signal quality might be in more realistic everyday environments. Still, this would be a big advance, dramatically increasing the scenarios in which MEG could be applied. Another important point is that the MEG system developed by Kernel has a (comparatively) small size. This is possible because they use optically pumped magnetometer (OPM) sensors, which allow for much smaller footprints than the more traditional MEG devices which require extremely low temperatures for their superconducting sensors to work, and therefore very bulky and immobile systems. OPM sensors have been used to build small systems in research settings (see here and here for examples), but nothing quite as polished and with such a high channel count as what Kernel unveiled.

Both the MEG and NIRS systems (which for now are two separate helmets) weigh in at roughly 1.5kg each, which is a lot to be carrying on the head. Having worked at a company intent on placing computers on people’s heads (Magic Leap, which produces glasses that weigh 260g), I can safely say that those weights would not work for any kind of prolonged use and would likely cause musculoskeletal issues in the long run (even the “heavy" Microsoft Hololens comes in at only 566g). Which brings us to the next point: these are obviously not consumer devices meant to be worn for hours on end, or even assistive devices for people with sensorimotor disorders (and to be clear, they don’t claim to be). These are research devices, to be used for neuroscience studies. This raises an important question about a company like Kernel: if their ultimate goal is to pursue general consumer applications, which are far on the horizon, what will sustain them in the shorter term? Kernel may have an answer in “neuroscience as a service."

The compelling (albeit tricky) vision of neuroscience as a service

Neuroscience as a service (coined NaaS by Kernel) is the idea of offering companies the ability to run state-of-the-art neuroscience studies by leveraging Kernel’s in house expertise and technology. The idea is simple and compelling, and one can easily imagine this creating a lot of interest from companies working in UX, learning, rehabilitation and more. Many (if not most) companies do not have the capacity and know-how to perform neuroscience studies to guide their product design and development. Yet many could benefit from these types of studies. Our neuroscientific knowledge may be too limited to learn anything of value in most industries, but it certainly seems plausible that this could work for a subset of companies and use-cases.

Nonetheless, a proposal like this ought to (and certainly will) raise a number of red flags in any neuroscientist’s mind. With our limited knowledge about the brain, and being able to access but a small percentage of ongoing activity with non-invasive sensors, things can quickly veer into pseudoscience. In my view, this is one of the biggest risks Kernel faces with NaaS. It’s easy to envision how a number of companies with little to no interest in rigorous neuroscience may try to add some trendy neurotech spice to their marketing campaigns (I can already hear the taglines: "this is your brain on X”). Luckily, offering this service on their own terms allows Kernel to tackle this issue however they please. But market forces may conspire against them, pressuring them to strike a difficult balance between scientific rigour and profits (i.e. smaller studies will have less power, but will also be cheaper, etc).

A few words about the two demo applications

Together with a description of their system, Kernel also released two demo “studies” serving to establish the company’s scientific and technical prowess. Although somewhat expected of a company trying to generate hype, the wording of their announcements significantly oversells what they achieved in these experiments and how it relates to previous work. Both experiments show nice results and support the quality of their system, but neither of them is groundbreaking. Unlike the headlines, the full-length article is more transparent about the significance of these two studies.

In one experiment, which they call Sound ID, Kernel scientists extended a previously published study. Their headline reads: "Kernel Sound ID decodes your brain activity and within seconds identifies what speech or song you are hearing.” In practice, the experiment works as follows: a person is made to listen to ten predefined and preprocessed song or speech excerpts, and the system will guess which of the ten auditory fragments the person is listening to based on the MEG signal (after tens of seconds). Importantly, this requires knowledge about the piece being listened to. Additionally, this approach would most likely degrade very quickly as one started adding more snippets to it (e.g. recognizing one out of a hundred songs the user might be listening to, etc). This is certainly an interesting illustrative example but is unlikely to be practically useful in this form. Although not from Kernel directly, suggesting this is a “Shazam for the mind” is definitely deep in hype territory. On the other hand, an interesting application of this technique which came up in my research is the ability to detect which auditory stream a person is attending to, for example when multiple people are talking at the same time. It’s easy to envision how this could be helpful in studies on attention as part of Kernel’s NaaS offering.

The second experiment was a more standard speller scenario, where a person can (slowly) type by visually attending letters on a virtual keyboard. This relies on a (widespread) smart trick: each virtual key on the screen flickers with a specific pattern, which is then reflected in the brain when a person looks at it. This way, it’s possible for the system to predict which key the person is looking at by recognizing the flickering pattern. This type of approach works well, and can allow people with motor impairments to communicate (e.g. someone with paralysis after spinal cord injury). What’s intriguing about this choice of experiment is that it highlights one of the main advantages of invasive neural interfaces (of the type Neuralink is pursuing). Specifically, the speller trick requires the person to “type” with their eyes, which is not a very fast and efficient way to enter text (the information transfer rate Kernel found was around 0.9 bit/s). On the other hand, invasive interfaces have been shown to allow speech synthesis directly from neural recordings (see here for another example), potentially enabling a “direct brain Siri” type of interface. Neither of these papers specifically look at information transfer rates, but they should be close to that of natural speech, which some estimates put at 40 bit/s. This highlights the dramatic difference between the two approaches. So while there is nothing wrong with this “speller” demonstration, it’s an odd choice, because it happens to be one of the areas where invasive interfaces have an objective, well-quantified performance advantage over non-invasive alternatives.

Final thoughts

It’s exciting to live in a time where neurotechnology receives so much attention and funding. One of the things extremely well-funded companies can achieve that researchers cannot (and have little incentive to), is building integrated and polished systems. Both the Neuralink and Kernel announcements have that in common: they take state-of-the-art research technologies and turn them into actual products. That’s no small feat and requires a lot of resources. In both cases, the resulting systems are many researcher’s dreams come true, offering signal quality, reliability and ease-of-use well beyond what clunky, semi-custom lab rigs can offer. Although researchers certainly cannot complain, one wonders whether such monumental efforts make financial sense. These companies share a common vision of a future where we interact with our digital tools in more efficient and seamless ways, using our brains directly. But there remains a long and windy road before this vision can become a reality for everyday consumers, and how easily these companies can sustain themselves until that day is still to be seen. Getting there will require more than streamlined and optimized versions of tools researchers already have. The science isn’t quite there yet, and some fundamental breakthroughs will need to happen before many of the promised sci-fi sounding application can see the light of day. The fancy tools Kernel and Neuralink are building will help get us there faster. Here’s to hoping they’ll have the patience to stick around and continue building them while Science meanders along its slow and steady path to the future.

Keeping a daily diary is an oft-touted habit, the benefits of which are promoted as seemingly boundless. Although much of it can easily be dismissed as hyperbole or click-bait, my personal experience in the matter has transformed me from a cautious skeptic into an enthusiastic convert. Here is what I’ve learned after keeping a daily diary for a thousand days (and counting).

It helps build self-accountability

Not being able to keep oneself accountable is arguably the major source of wasted dreams and failed ambitions. It’s what keeps many of us from achieving the goals we set out for ourselves. One of the culprits is the lazy part of our brain (what Tim Urban’s calls the instant gratification monkey), which is constantly trying to jeopardise our plans by pursuing instant gratification. Unfortunately, many of the things the long-term planners in us want are things which require tedious work in the now, and only provide their rewards in the vague then. The only way to achieve those things is to put in the necessary effort. But with a mischievous monkey sitting in our brains, it’s easy to just give up, procrastinate, just this one time, just for today. With no deadlines, no accountability, there really aren't any negative consequences for skimping out on the hard parts. It’s only looking back that one may regret not achieving the things they had set out to do, and by then it may be too late.

That’s where the diary comes in. It's certainly not perfect, and it certainly won’t defeat the "procrastination monkey" on its own, but it’s a start. The reason it helps is that by sitting down and writing about your day, you are helping keep yourself accountable. You are effectively forcing yourself to stop and think for a moment, to put things into perspective, evaluate them in retrospect. If you’ve been using your time in ways you had not planned to, keeping a diary helps you realise you've been cheating yourself. To put it simply, without a diary, pursuing instant gratification may not lead to anything bad — life just goes on and your temporary qualms get brushed aside. But with a diary you get to disappoint yourself again and again, every day. This pleasant exercise forces you to face the truth and hopefully gives you the drive to look for solutions.

It’s a great tool to shoe-horn other habits into

Keeping a diary every day is a habit one needs to develop, and like most (good) habits, getting it to become second-nature takes time and discipline. There’s a high chance that you will stop early on, when it’s hardest to keep going (like those gym memberships that just sit there from February onwards). But if you successfully reach the point where daily logs are simply part of your life, congratulations, you’ve unlocked a very powerful tool for self-improvement.

The reason it’s such a powerful tool is simple. It’s easy to use an established daily diary routine to boost other would-be habits. For instance, if you wanted to work on your novel daily (say, by writing a thousand words), you could simply add a “novel word counter” entry to your diary where you record how many words you managed to put down every day. By doing so, you are using your existing, established routine to force yourself to think about your other goals every day. It’s easier to build a habit on top of an existing habit than it is to build a habit from scratch.

You might just get to know yourself a little better (and fight back your inner biases)

What you write in your diary will depend on what you plan to use it for. But whatever you do write, it’s likely to involve crystallising some of the thoughts running around your mind into concrete written sentences. The simple step of forcing yourself to put words onto your thoughts helps you understand them better. This is the well known power of introspection.

Behavioural neuroscience has established we are excellent "confabulators", making up stories to justify our actions and thoughts. We are also essentially blind to the world around us, experiencing only a small part of it, and making up the rest. In other words, we paint a picture of the world tainted by our specific biases and beliefs. Considering these limitations, it’s easy to see how a decision we took a while back might suddenly seem like a strange move under a new light (or simply in a different state of mind). The power granted by a diary to read through your own thought processes provides invaluable insight into your own beliefs, biases and thought patterns.

Of course, a diary is no magic wand. It may help shed some light on your mind’s shortcomings. Overcoming them, however, requires intellectual honesty and a healthy dose of self-criticism, assuming it can be done at all. The existence of (and fight against) our inner biases is something Bryan Johnson explores in great detail in his blog post, which is worth a read.

Offloading memories and creating traceability

Finally, a more tangible and practical benefit of keeping a daily diary is the fact that anything you write down will be searchable (if your diary is in digital form) and available for future reference. Depending on the level of detail that goes into your daily scribbles, you diary could help you remember anything from important events to mundane details. Remembering when you took that holiday to the sea may only be good for proving your spouse wrong (disclaimer: I do not recommend ever proving your spouse wrong), but knowing what your boss told you about that project which seemed irrelevant at the time but is now crucial to your career could be a life-saver.

We established that our brains are full of biases and don’t really see the world the way it is. The same hold true for our memories. They are a mere shadow of past events, deformed by time, filtered by our perception and emotions. Having a reliable account of important events is an objectively useful aspect of keeping a diary, and comes in handy more often than one might assume.

Keeping a daily diary may not be the most ambitious goal you decide to pursue, but it might just end up impacting your life in more ways than you anticipated. It’s a low investment high return kind of deal. So get writing.

A few days ago, Elon Musk’s latest venture was finally revealed after months of dropped hints and speculation. The new company, Neuralink, will presumably pursue the futuristic sounding goal of intelligence amplification (IA), joining Kernel, a slightly older effort with analogous goals launched by Bryan Johnson. Practically, these companies will work on developing new techniques to link the human brain to computers. Although many such brain-machine interfaces (BMIs or BCIs) exist today, none of the current systems are capable of precise, large scale recording of the entire brain over long periods of time, while at the same time minimising the risks associated with the initial insertion procedure (ideally forgoing the need to crack the skull open). Clearly, in order to take any meaningful steps towards intelligence amplification, both companies will initially have to focus their efforts on creating a new neural interface, one allowing for a high-bandwidth communication channel between brains and computers.

Fittingly, these efforts arrive at a time where the field of brain-computer interfaces is ripe for momentous achievements. In recent years, following the clinical success of implantable BCI systems in humans (great examples can be found here and here), a multitude of new and promising designs for neural interfaces have been proposed. These novel designs aim to overcome the technical challenges of current generation systems, such as deleterious immune responses, tissue damage and chronic stability issues. Kernel and Neuralink are likely to be pursuing ideas which are extensions of the current state-of-the-art, possibly working on several promising approaches in parallel. Some recent and intriguing developments include: stentrodes, small electrodes inserted through the veins, making for a minimally invasive insertion procedure, brain dust, tiny wireless electrodes designed to be sprinkled throughout the brain and powered externally via ultrasound, and injectable mesh electrodes, nano-scale polymer meshes which are injected with a syringe and then unfolded in place. Considering Elon Musk’s fondness for the sci-fi term neural lace, this last approach may be what Neuralink will focus on at first.

Of course, advances in such fundamental areas as brain-computer interfaces will have far reaching implications, extending well beyond the somewhat distant goal of intelligence amplification pursued by both ventures. Indeed, if they succeed in obtaining even modest gains in the pursuit of improved neural interfaces, they will find willing collaboration partners in areas such as brain disorders treatment, fundamental brain research, control of robotic devices for paralysed patients, and many more. Although their intelligence amplification vision may be lofty and unattainable in the short term (Elon Musk mentioned a timeframe of 5 years, which is somewhere between optimistic and insane, depending what one defines as "meaningful intelligence amplification”), the immediate clinical benefits will be significant. In fact, these efforts are likely to play out similarly to the way SpaceX did—first delivering short term benefits which also happen to be financially viable (e.g. commercial rockets to deliver satellites), before eventually attaining the real goal (in the case of SpaceX, trips to Mars and beyond). Despite having directly applicable benefits, focusing communication efforts around the long term vision is smart and necessary. The hype fuels massive non-technical followings on the internet and beyond, while at the same time, articulating a well reasoned long term mission helps recruit the top minds in the field who share the same vision.

Speaking of long term vision, as Elon Musk dramatically puts it, in this case the stakes are extremely high (existential-threat-level high). With the advent of general-purpose AI around the corner (again, “around the corner” could mean anything between 5 to 100 years depending on whom you ask), the risks associated with super intelligent computers are looming ever more menacingly in the background. Beyond making entire job segments obsolete, what many are afraid of is the immeasurable harm a powerful AI could do in the wrong hands, along with the not-so-unlikely prospect that somebody may trigger an apocalypse scale disaster by mistake (Elon Musk thinks Google is

a good candidate for the biggest “oops” moment in humankind). To mitigate the dangerous aspects of AI, the approach both companies are working on (and which I have proposed in the past) is to ensure that by the time we have the technology to build super intelligent AIs, we will also have the technology to seamlessly interface our brains with computers. That way, any advance in AI technology can presumably be used to benefit human intelligence directly, making it hard for AI to become independently more advanced than human intelligence. Problem solved.

As some have been quick to point out, however, it may not be as simple as that. Indeed, one could argue that the same risks associated with super intelligent AIs could be equally problematic in the case of super intelligent humans. There is no guarantee that a super intelligent person, whose motivations, thoughts and desires we cannot begin to imagine (by definition), will not decide to act in a way that is dangerous or harmful to “lesser" humans. In fact, one could articulate plenty of scenarios where intelligence amplification leads to non-desirable futures, such as hyper-segmented societies where the rich can afford to become super intelligent while the poor are stuck with stupid old human brains, leading to a widening economic divide and increasing social tensions.

Still, all this is speculation. The only certainty is that if Neuralink and Kernel succeed, the world will benefit immensely in the short-term, especially when it comes to understanding and treating our ageing brains, a challenge we will increasingly face as humans grows older. Will these companies save us from an AI apocalypse which might never come to pass in the first place? Probably yes, in fact, but only time will tell.

In the wake of the US presidential election, an important issue has been brought up: social media is full of fake news. Some go so far as to suggest this may have significantly swayed the final result. Regardless of the answer to that question, the issue of false claims on the internet is a very profound one, and reaches much, much further than the realm of politics. There should be no sugar-coating it: misinformation is dangerous, and a potential threat to society.